This is the sixth commentary in the series “Defining Zero Trust Security.”

A brief history of “the way we’ve always done it.”

Legacy network security works. Well, network security worked, once, a long time ago. Most of the time. In the 1970s and early 1980s. Until suddenly it didn’t.

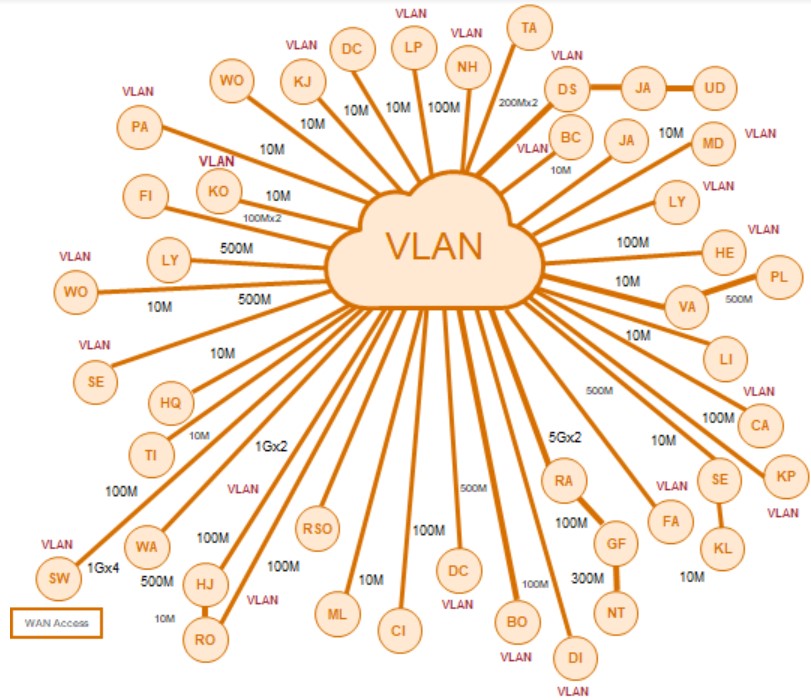

Back in the day, network architecture was of a “hub-and-spoke” design: Destination applications (on a mainframe, perhaps) were attached to a central network, with that network often represented as a small ring in the center of the metaphorical bicycle wheel. Individual users resided at the end of each of the many spokes. The more users, the greater the network complexity, and the more endpoints to secure.

Figure 1. Traditional hub-and-spoke architecture.

Connection was to the network itself. Users came into the office and logged on to the corporate network to connect to applications housed in a data center, or often, in a server stack situated within the confines of that same building. Users logged in with a password, navigated the path to their needed application (“cd e:/hrapps”), and went about their work.

Thirty years ago, we would build applications and put them in the data center (probably on a mainframe housed in the data center). For employees needing centralized computing resources, the data center became the center of gravity for the organization. As computer-based work expanded, so did the reach of the network, incorporating connections for users at other locations, with extensions often accommodated via wide-area-network (WAN) installations. More branches meant more users, and greater complexity, cost, and (literal) length of the physical network to administer and secure.[1]

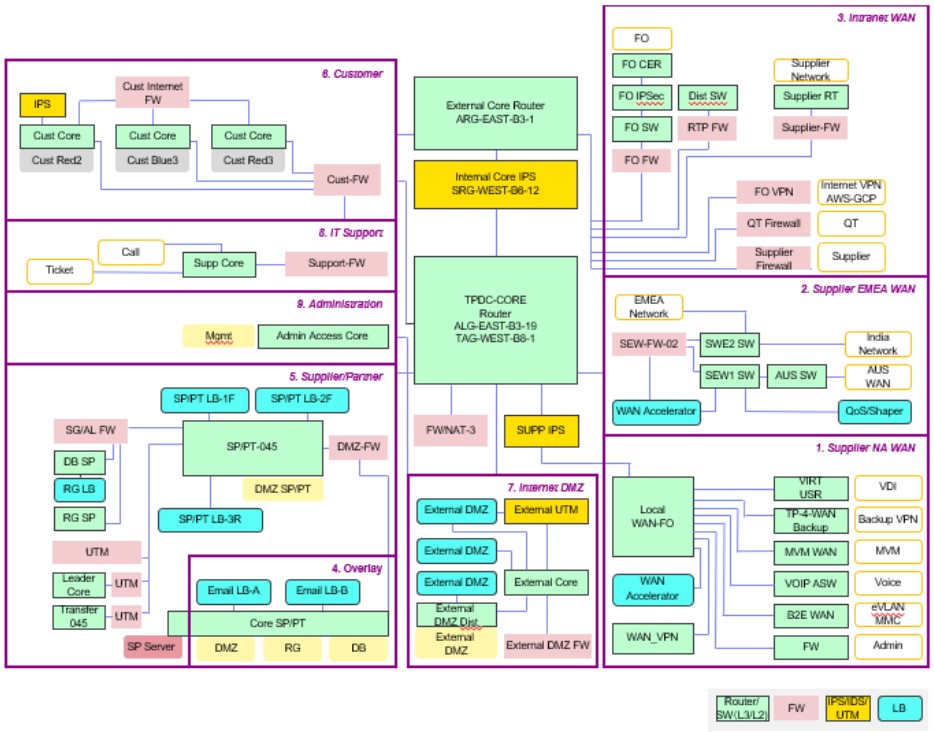

Network security of the time aimed to keep the bad guys outside the castle walls. To accomplish that, IT administrators employed a so-called “castle-and-moat” security architecture: A metaphorical moat -- in reality, a perimeter firewall -- encircled the outside of the physical network. Those administrators deployed routers, switches, firewalls, UTMs, and load-balancers to protect the network from malicious-data ingress or asset-data egress. The firewall’s “drawbridge” was the only way into the corporate castle, or more precisely, onto the corporate network. Access was to the (allegedly) secured network, and not, say, to a specific destination resource. With a user ID and password, anyone could log on and gain access to any system attached to the corporate network.

Figure 2. Traditional castle-and-moat security in all its glory
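The access model described above can be sketched in a few lines. This is a minimal illustration, not a depiction of any real product: the `PerimeterNetwork` class, the user ID, the password, and the system names are all hypothetical. It shows that a single check at the "drawbridge" grants reach to everything attached to the network.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PerimeterNetwork:
    """Hypothetical castle-and-moat network: one check at the drawbridge."""

    passwords: dict[str, str]                        # user ID -> password
    systems: set[str] = field(default_factory=set)   # everything attached to the network

    def log_on(self, user_id: str, password: str) -> set[str]:
        """Return the set of systems reachable after a logon attempt."""
        if self.passwords.get(user_id) != password:
            return set()           # drawbridge stays up: nothing is reachable
        return set(self.systems)   # access is to the network, so to every system on it


# Illustrative values only (assumptions, not taken from any real environment).
network = PerimeterNetwork(
    passwords={"jdoe": "hunter22"},
    systems={"hr-apps", "payroll", "mainframe"},
)

print(sorted(network.log_on("jdoe", "hunter22")))  # ['hr-apps', 'mainframe', 'payroll']
print(sorted(network.log_on("jdoe", "wrong")))     # []
```

Nothing in `log_on` depends on which system the user actually needs. That is the property the castle-and-moat description points to: the decision is made once, at the perimeter, for the network as a whole.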

The model made sense back in that era. Traditional network security was designed for a 1970s way of work: on-premises and without access to external resources beyond the data center. Security was governed by physical machines stacked at the gateway. Access to the network was only as secure as the lock on the front door of headquarters and the complexity of a user’s network password, which was probably capped at eight ASCII characters.

Legacy security models don’t support the new way of work

Network security may have been appropriate for the business operating models of a bygone era. But no one works that way anymore. Today, much work is performed outside the enterprise’s physical walls and network perimeter. It’s a hybrid way of work: Employees work in the cloud, on the internet. They use their own devices. They connect from anywhere -- France, Bakersfield, home office, Disney World, airplanes, Starbucks, the office down the hall.

Those users expect fast performance regardless of physical location. But a traditional hub-and-spoke network topology cannot deliver it. In a hub-and-spoke environment, data travels an indirect path, a path made more convoluted when the user is accessing the corporate network from a distant location. Traffic is backhauled from the originating endpoint to a distant security gateway -- literally a stack of appliances, probably housed in a data center or even an IT closet at HQ -- for security processing. With its linear data processing, that stack bottlenecks traffic, acting like a single tollbooth processing vehicles merging from a zillion-lane freeway. And the extra distance data must travel adds performance-affecting lag. (Imagine flying from Miami to New York with a “quick” stopover in Tokyo.)
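The cost of that detour can be approximated with simple arithmetic. The sketch below is a rough illustration only: it counts one-way propagation delay and ignores queuing, routing, and appliance processing. It assumes light travels about 200 km per millisecond in optical fiber, and the distances and function names are made up for the example.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def propagation_ms(distance_km: float) -> float:
    """One-way propagation delay over a fiber path of the given length."""
    return distance_km / FIBER_KM_PER_MS


def direct_ms(user_to_app_km: float) -> float:
    """Delay when the user reaches the application directly."""
    return propagation_ms(user_to_app_km)


def backhauled_ms(user_to_gateway_km: float, gateway_to_app_km: float) -> float:
    """Delay when traffic must first detour through a distant security gateway."""
    return propagation_ms(user_to_gateway_km) + propagation_ms(gateway_to_app_km)


# Illustrative distances only (assumptions, not measurements).
direct = direct_ms(500)                 # user is 500 km from the application
backhauled = backhauled_ms(4000, 4500)  # gateway is 4,000 km away, then 4,500 km to the app

print(f"direct: {direct:.1f} ms, backhauled: {backhauled:.1f} ms")
# direct: 2.5 ms, backhauled: 42.5 ms
```

Under these assumed distances, the backhauled path is 17 times slower before any security processing happens at the gateway.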

The new hybrid way of work -- which, let’s be honest, isn’t that new, but has received more attention thanks to the pandemic -- doesn’t just tax traditional network and security architectures. It breaks them. Traditional infrastructure attempts to protect access to the network via perimeter-based security. But how do you secure a perimeter that includes the open internet?

You can’t and you don’t.

Not that legacy-infrastructure-burdened IT administrators don’t try. To counter threats, they stack appliances at the metaphorical drawbridge, lengthening linear security processes, which impacts performance, adds (considerable) maintenance complexity, and increases operating costs (dramatically).
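A simplified model shows why stacking hurts. It assumes every packet passes through each appliance in series, so per-packet delays add up and the slowest appliance caps throughput. The `Appliance` type, the appliance names, and all the figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Appliance:
    name: str
    latency_ms: float     # assumed per-packet processing delay
    capacity_gbps: float  # assumed maximum throughput


def stack_latency_ms(stack: list[Appliance]) -> float:
    """In a serial stack, every appliance adds its own delay."""
    return sum(a.latency_ms for a in stack)


def stack_capacity_gbps(stack: list[Appliance]) -> float:
    """In a serial (non-empty) stack, the slowest appliance sets the ceiling."""
    return min(a.capacity_gbps for a in stack)


# Illustrative figures only (assumptions, not vendor specifications).
base_stack = [
    Appliance("firewall", latency_ms=0.5, capacity_gbps=10.0),
    Appliance("intrusion-prevention", latency_ms=1.0, capacity_gbps=4.0),
]
bigger_stack = base_stack + [
    Appliance("sandbox", latency_ms=2.0, capacity_gbps=2.0),
]

print(stack_latency_ms(base_stack), stack_capacity_gbps(base_stack))      # 1.5 4.0
print(stack_latency_ms(bigger_stack), stack_capacity_gbps(bigger_stack))  # 3.5 2.0
```

In this model, adding an appliance can never lower latency or raise capacity. Each new box in the line can only make the tollbooth slower.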

Today, that approach doesn’t work. Every network node -- and there are many more of them -- is a possible cyberattack vector. The further the network spokes stretch, the more targets, the greater the attackable surface area for hackers, and the greater the vulnerability.

Extending legacy network and security infrastructure to the cloud only weakens corporate threat posture. At the gateway, the volume of data is simply too great: Packet volumes easily overwhelm traditional security appliances intended to inspect incoming and outgoing data. Add to that the threat represented by encrypted malware code. Traditional hardware-based security simply cannot keep up. The new way of work renders it impotent.

[1] Increased network complexity and the increased threat risk that accompanies it remain a paradox of corporate success: Grow the revenue, add more branches, stretch the network, duplicate hardware, extend attackable surface area, expose more IP addresses to the outside world, and subsequently invite the attention of cybercriminals.
